What issue can we solve for you?

Type in your prompt above or try one of these suggestions

Suggested Prompt

Insight

Building Large Language Model Operations (LLMOps) on AWS Technology Stack

Building Large Language Model Operations (LLMOps) on AWS Technology Stack

How to deploy Gen AI solutions at scale without spending excessive time and money assembling tools and solutions from various vendors.

AI is expected to contribute $320 billion to the MENA economy by 2030, with annual growth rates of 20% to 34%. The UAE and Saudi Arabia are leading the region in AI investments and initiatives. Saudi Arabia has launched a $40 billion fund to back AI initiatives, while the UAE has set up MGX, an investment firm focused on AI infrastructure with potential assets under management of over $100 billion. Saudi Vision 2030 and the UAE's National AI Strategy 2031 are key drivers of AI adoption in the region. There's a growing focus on developing Arabic language models and region-specific AI applications.

While adoption is high, many organizations are still in the early stages of realizing value from Gen AI investments. One of the emerging themes across enterprises is a cloud-native stack for enterprise-level Gen AI initiatives and a growing need for robust and scalable processes to ensure the effective and reliable operation of LLMs. CIOs and CTOs are grappling with the complex challenge of selecting and implementing the right tooling to support their organization's Gen AI initiatives within the LLMOps framework. They must navigate a rapidly evolving landscape of technologies and methodologies while ensuring their choices align with both immediate needs and long-term strategic goals. This decision-making process is further complicated by the need to balance innovation with practicality, cost-effectiveness, and scalability across the entire LLMOps lifecycle. This paper examines AWS capabilities across the LLMOps ecosystem (with a focus on the model usage side of LLMOps) and highlights practical considerations for some of the common themes around Gen AI.

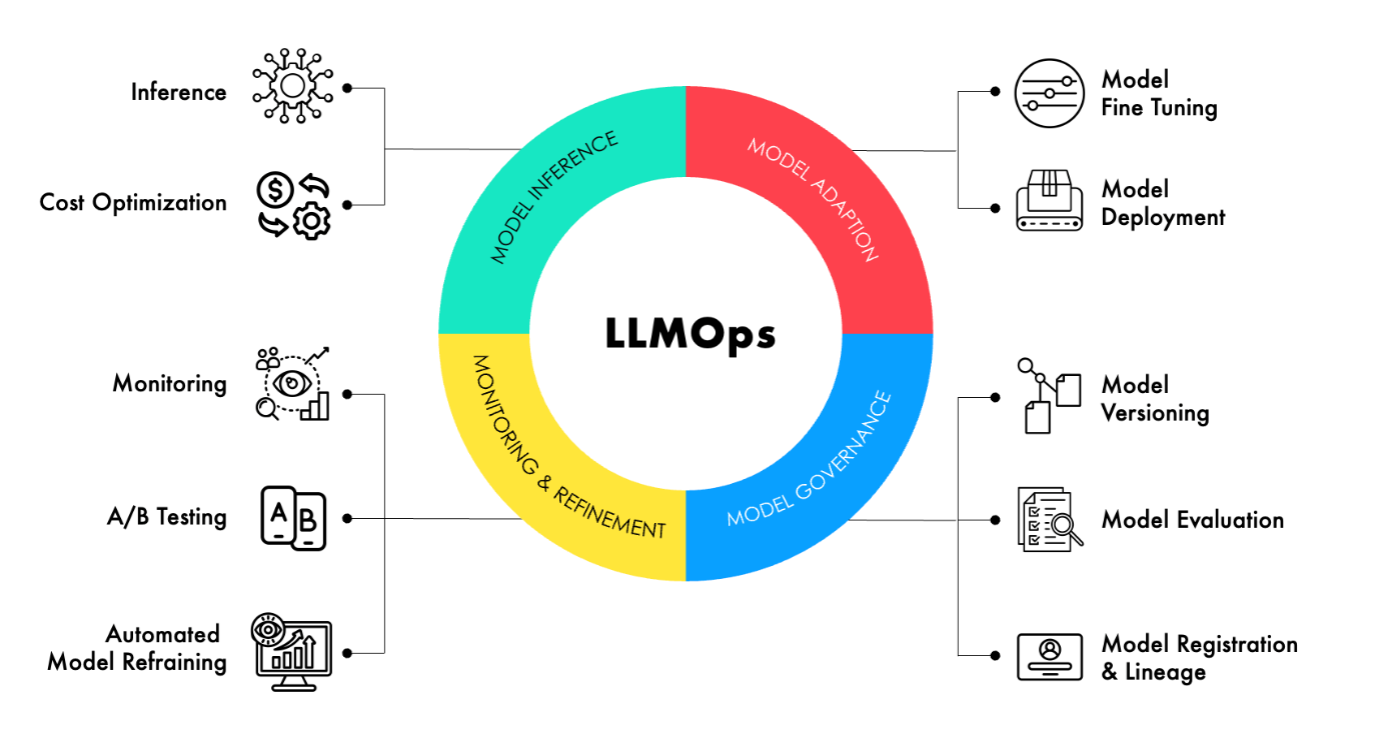

LLMOps involve model training, fine-tuning, deployment, monitoring and management of LLMs and their resources.

AWS, with its global scale across industries, is innovating rapidly across the above areas of LLMOps and has industry-leading capabilities:

- Comprehensive set of data services that covers length and breadth of data governance, data cataloging, cleansing, ingestion, processing, storage, querying and visualization of data.

- Purpose-built infrastructure to run AI workloads, including training foundation models and producing inferences (e.g., AWS Trainium, AWS Inferentia, Amazon SageMaker)

- Tools and pre-trained foundation models to build and scale generative AI-powered applications (e.g., Amazon Bedrock)

- Applications with built-in generative AI that do not require any specific expertise in machine learning (e.g., Amazon Q)

When it comes to choosing an LLM, there are typically three broad paths:

- Building the foundation model from scratch,

- Fine-tuning a pre-trained model,

- Using an off-the-shelf model.

This paper covers only the model usage side of LLMOps, primarily addressing the needs of a target audience who are expected to be LLM fine-tuners and model buyers rather than builders.

Amazon Bedrock is a comprehensive platform providing access to a variety of Foundation Models (FMs) from external providers as well as Amazon's own Titan models. With this selection of FMs and their applicability to different types of use cases, you can easily test the performance of various models on Bedrock. It allows accessing the FMs using an API and integrating LLM capabilities into your development environment. Amazon Bedrock ensures that customer private data is not shared with third parties or with Amazon's internal development teams. Custom Model Import on Amazon Bedrock helps organizations bring their proprietary models to Bedrock, reducing operational overhead and accelerating application development. For traditional ML models, Amazon SageMaker JumpStart offers a great pre-trained model library to leverage.

Model Adaptation and Deployment

Foundation models can be fine-tuned for specific tasks using private labeled datasets in just a few steps. Amazon Bedrock supports fine-tuning for a range of FMs. A second way some models (Amazon Titan Text models in particular) can be adapted is using continued pre-training with unlabeled data for specific industries and domains. With fine-tuning and continued pre-training, Amazon Bedrock makes a separate copy of the base FM that is accessible privately, and the data is not used to train the original base models. The FMs can be equipped with up-to-date proprietary information using Retrieval Augmented Generation (RAG), a technique that involves fetching data from your own data sources and enriching the prompt with that data to deliver more relevant and accurate responses. Knowledge Bases for Amazon Bedrock automates the complete RAG workflow, including ingestion, retrieval, prompt augmentation, and citations, removing the need for you to write custom code to integrate data sources and manage queries. Content can be easily ingested from the web and from repositories such as Amazon Simple Storage Service (Amazon S3). Once the content is ingested, Amazon Bedrock Knowledge Bases divides the content into blocks of text, converts the text into embeddings, and stores the embeddings in your choice of vector database. Continuously retraining FMs can be compute-intensive and expensive. RAG addresses this issue by providing models access to external data at run-time. Relevant data is then added to the prompt to help improve both the relevance and accuracy of completions.

However, there can be some scenarios where FM fine-tuning might require aspects of explicit training. In those scenarios, Amazon SageMaker Ground Truth can be leveraged for data generation, annotation, and labeling. This can be done either using a self-service or AWS-managed offering. In the case of the managed offering, the data labeling workflows are set up and operated on your behalf. Data labeling is completed by an expert workforce. In a self-serve setup, a labeling job can be created using custom or built-in workflows, and labelers can be chosen from a group. Another option is using Amazon Comprehend for automated data extraction and annotation for natural language processing tasks. AWS Data Exchange also provides curated datasets that can be used for training and fine-tuning models.

A common pattern for storing data and information for Gen AI applications is converting documents, or chunks of documents, into vector embeddings using an embedding model and then storing these vector embeddings in a vector store/database. Customers want a vector store option that is simple to build on and enables them to move quickly from prototyping to production so they can focus on creating differentiated applications. Here are a few options to consider:

- Amazon Vector Engine for OpenSearch Serverless: The vector engine extends OpenSearch's search capabilities by enabling you to store, search, and retrieve billions of vector embeddings in real time and perform accurate similarity matching and semantic searches without having to think about the underlying infrastructure.

- Integration with existing Vector stores like Pinecone or Redis Enterprise Cloud.

- Amazon Aurora PostgreSQL and Amazon RDS with pgvector extension.

The appropriate service needs to be configured based on scalability and performance requirements.

The serverless feature of Amazon Bedrock takes away the complexity of managing infrastructure while allowing easy deployment of models through a console. For conventional ML algorithms, Amazon SageMaker also provides robust model deployment capabilities, including support for A/B testing and auto-scaling. AWS Lambda can be used for serverless model serving. Amazon ECS and Amazon EKS offer container-based deployment options for ML models, providing flexibility and scalability.

While adoption is high, many organizations are still in the early stages of realizing value from Gen AI investments.

Model Governance

Model governance covers model versioning, evaluation, registration and lineage. Model versioning enables us to compare model performance and analyze responses to better reflect business objectives. The evaluation feature of the Amazon Bedrock can be used to easily evaluate the model’s output. It also allows maintaining a central registry of models and tracks model lineage.

Model Monitoring and Implementing Guardrails

In addition to managing drift, preventing the generation of harmful or offensive content, protecting sensitive information, and managing “hallucinations” becomes a key area of focus for any enterprise deployment of Gen AI. Foundation models on Amazon Bedrock have built-in protections, but these may not fully meet an organization's specific needs or AI principles. For Model refinement, implement custom safety and privacy controls, especially when using multiple models across different use cases. This ensures consistent safeguards and a uniform approach to responsible AI while accelerating development cycles. Amazon Bedrock Guardrails implements safeguards that are customized to use cases and responsible AI policies. Multiple guardrails tailored to different use cases can be applied across multiple FMs, improving user experiences and standardizing safety controls across generative AI applications. For externally hosted or third-party FMs, the ApplyGuardrail API can be used across user input and model responses. Other services, such as Amazon SageMaker Model Monitor, automatically monitor deployed models for data and model quality drift. Amazon CloudWatch can be used for custom metric monitoring and alerting. AWS CloudTrail provides audit logs for all API calls, which can be useful for tracking model usage and performance over time.

Like any other data-driven computing application, core security disciplines such as identity and access management, data protection, privacy and compliance, application security, and threat modeling are critically important for generative AI workloads. For fine-tuning FMs, it is essential to understand the data used to train the model from ownership and quality perspectives. One option is to use a filtering mechanism on data provided through RAG with the help of Agents for Amazon Bedrock, for example, as an input to a prompt.

In addition, it is important to identify what risks apply to your generative AI workloads and how to begin to mitigate them. One mechanism to identify risk is through threat modeling by ensuring common threats such as Prompt Injection are handled. Emerging threat vectors for generative AI workloads create a new frontier for threat modeling and overall risk management practices.

AWS provides comprehensive security features, including IAM for access control, KMS for encryption, and CloudTrail for auditing. Amazon Macie can help identify sensitive data in datasets. AWS Security Hub offers a comprehensive view of security and compliance status. For ML-specific governance, SageMaker Model Cards help document model information for transparency and accountability.

Model Inference

Reducing inference costs is a major focus, involving techniques like model compression, efficient software implementations, and hardware optimization. Low cost and low latency are critical demands for Gen AI applications; this is where purpose-built infrastructure for model training and running workloads increases efficiency. AWS offers purpose-built ML infrastructure like Amazon EC2 instances powered by AWS Inferentia and AWS Trainium chips for cost-effective training and inference. There is also a choice of leveraging NVIDIA GPUs through Amazon EC2 G5 and P5 instances. Amazon EC2 P5 instances can be deployed in EC2 UltraClusters, which enable scaling up to higher GPU counts. Amazon SageMaker provides managed infrastructure for ML workloads, automatically scaling resources as needed. For engineers wanting to train foundation models faster, distributed training can be tried using Amazon SageMaker HyperPod, which reduces the time to train models by up to 40%.

The above sections provide a comprehensive view of how end-to-end LLMOps can be implemented natively using Amazon capabilities. Amazon Bedrock, SageMaker, and other AWS offerings provide a robust ecosystem for developing, deploying, and managing generative AI applications efficiently.

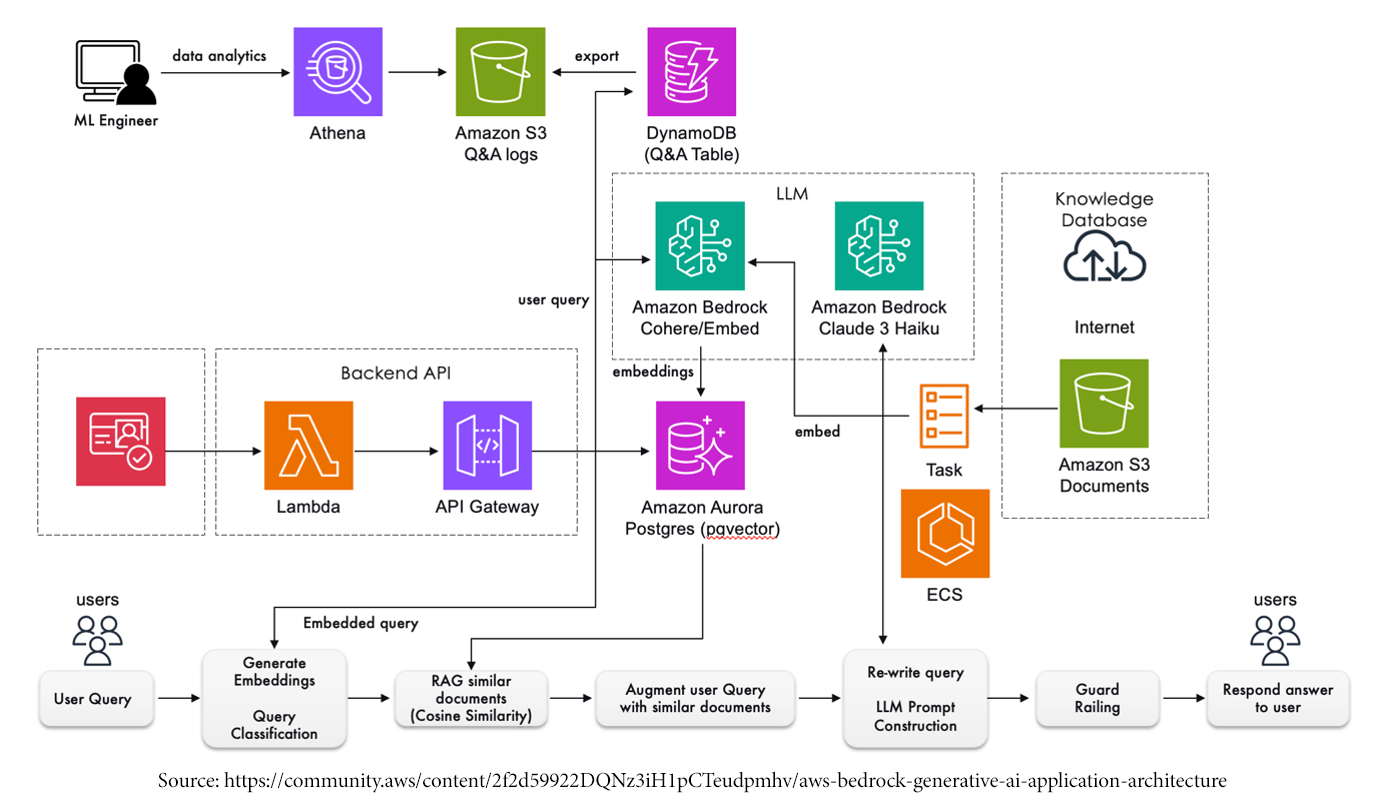

The diagram below illustrates an example of Amazon Bedrock's Reference Architecture for implementing a simple Q&A bot. It shows the workflow from user query input to AI-generated response, highlighting embedding generation, document retrieval, query rewriting, and response guardrails using AWS services such as Lambda, Bedrock Cohere/Embed, and Aurora Postgres.

The solution here involves generating embeddings for query matching and classification, using RAG to retrieve relevant documents from a vector database, and augmenting the user query with this information. The augmented query is then rewritten and used to construct a prompt for the LLM. Finally, the LLM's response undergoes guard railing before being formatted and presented to the user.

Before concluding, let’s address a key use of Implementing Autonomous AI Agents on Cloud. Adoption of AI agents is rapidly increasing across industries. AI agents are autonomous software programs that can perceive their environment, make decisions, and take actions to achieve specific goals. Amazon Bedrock provides a native interface and the capability to create fully managed agents in a few steps. Agents for Amazon Bedrock accelerate the development of these autonomous software programs by extending the capability of Foundation Models (FMs) and integrating external systems and data sources through API calls. This allows the FM that powers the agent to interact with the broader world and extend its utility beyond just language processing tasks. Agents for Amazon Bedrock can automate prompt engineering and orchestration of user-requested tasks. Once configured, an agent automatically builds the prompt and securely augments it with company-specific information to provide responses back to the user in natural language. It provides native support for AWS Lambda functions for API calls or to write any other business logic. As these are fully managed, there is no work required for provisioning or managing infrastructure.

To summarize, enterprises can rely on Amazon's capabilities to deploy Gen AI solutions at scale without spending excessive time and money assembling tools and solutions from various vendors.

Sources:

Let’s Connect

Are you ready to unleash the potential of LLMOps on your AWS tech stack? Reach out to discover how Publicis Sapient and AWS can help your business maximize the power of Gen AI and outdistance the competition.

Related Articles

-

![]()

Generative AI with Publicis Sapient and AWS

Embrace a new era within your organization by leveraging generative AI. Publicis Sapient’s partnership with AWS simplifies navigation through the generative AI landscape.

-

![]()

Publicis Sapient Achieves AWS Migration Competency Status

Recognized for expertise in cloud migration and our capability to guide clients through complex transitions, leveraging the cloud to drive business growth and innovation.

-

![]()

AskBodhi: Deploying Generative AI at Scale

AskBodhi on AWS Cloud technology is our groundbreaking solution to power Generative AI and enable you to deploy use cases in a matter of days or weeks.